I then appeared to have an orphaned DVS. I resolved this by following a series of steps to match up the VSM and vCenter extension keys. Note that when you perform these steps and reconnect the VSM to the DVS, the VSM will sync its port profile configuration with the DVS. If you have the running config backed up, now would be a good time to load that config into the VSM. If it's a fresh VSM, you'll lose all of the port profiles.

- SSH into the Nexus 1000V. Issue show svs connections to get the name of the svs connection to the vCenter server.

- Enter config mode. Type in svs connection [vcenter connection name]

- Type in no connect

- Exit back to the top level of config mode (conf t)

- Locate the extension key for the orphaned DVS in vCenter. This is on the summary page for the DVS. In the screenshot, you can see it is Cisco_Nexus_1000V_1351344349

- In config mode on the Nexus 1000V, enter vmware vc extension-key Cisco_Nexus_1000V_1351344349 (the number will be different in your environment)

- Save the config and reboot the Nexus.

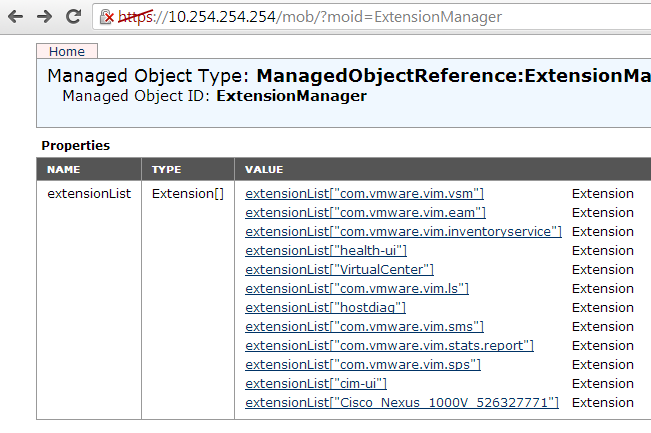
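The VSM-side steps so far can be sketched as one CLI session. This is only an illustration: the n1000v prompt and the connection name vcenter are placeholders I made up, and the extension key is the one from the screenshot, so substitute your own values. Note that the config-mode command is svs connection (singular), while the show command is show svs connections.

```text
! Find the name of the svs connection to vCenter
n1000v# show svs connections

! Disconnect the VSM from vCenter ("vcenter" is a placeholder name)
n1000v# configure terminal
n1000v(config)# svs connection vcenter
n1000v(config-svs-conn)# no connect
n1000v(config-svs-conn)# exit

! Set the VSM's extension key to match the orphaned DVS
n1000v(config)# vmware vc extension-key Cisco_Nexus_1000V_1351344349
n1000v(config)# exit

! Save and reboot
n1000v# copy running-config startup-config
n1000v# reload
```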

- Delete the current vCenter extension key. Browse to https://vcenterIP/mob/?moid=ExtensionManager

- On that page you should see a key ID that is different from the key on the orphaned DVS. Copy this key so you can unregister it.

- Click UnregisterExtension. Paste in the key from the previous step. Click Invoke Method. It should say void if this is successful.

- Restart vCenter.

- Now you need to get the extension key from the Nexus 1000V for the DVS you are recovering and import it into vCenter. Browse to http://[VSM IP address]. Right-click the Cisco Nexus 1000V Extension xml link and save it.

- Open the vCenter client. Click on Plug-ins, Manage Plug-ins. Right-click, add a new plug-in and upload the xml file.

- Go back to the Nexus 1000V. In config mode, type in svs connection [vcenter connection name]. Type in connect. The connection to vCenter should come up now that the extension key matches on both sides. If you browse back to the vCenter extensions page, you'll now see the correct extension key ID.

You can also confirm the Nexus 1000V connection to vCenter from the VSM. You should now have regained control over the previously orphaned DVS. The VSM and DVS should also have now synced configurations.
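A rough sketch of the reconnect and the check from the VSM side, again using the made-up prompt n1000v and connection name vcenter. I'm assuming show vmware vc extension-key is available on your release to print the key the VSM is currently using; if it isn't, the vCenter extensions page gives you the same information.

```text
! Reconnect to vCenter ("vcenter" is a placeholder name)
n1000v# configure terminal
n1000v(config)# svs connection vcenter
n1000v(config-svs-conn)# connect
n1000v(config-svs-conn)# end

! Check that the connection came up
n1000v# show svs connections

! Check which extension key the VSM is presenting
n1000v# show vmware vc extension-key
```

In the show svs connections output, the things to look for are that the connection reports as connected and that the DVS it lists is the one you were recovering.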

At this point, if you want to remove the DVS entirely and start from scratch (pulling the VEM software off ESXi, deleting the VSMs, and starting over fresh), you'll want to get the DVS out first.

On the Nexus 1000V, go into conf t, svs connection [vcenter connection name], then enter no vmware dvs. This will delete the DVS from the VMware side.
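As a sketch, the removal looks something like this, with the same placeholder prompt and connection name as before. I'm assuming the keyword on the VSM is dvs and that the svs connection is still up when you run it, since the VSM has to reach vCenter to delete the switch. Expect the VSM to ask you to confirm before it removes anything.

```text
! Remove the DVS from vCenter ("vcenter" is a placeholder name)
n1000v# configure terminal
n1000v(config)# svs connection vcenter
n1000v(config-svs-conn)# no vmware dvs
```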
